Artificial Intelligence Survey

Scientists’ Views on Regulation

Section 03

Survey Overview and Demographics

General Overview

Study Date: 29.09.23–04.11.23

Geographic Coverage: United States

Expertise:

- 25% Biology

- 22.9% Civil and Environmental Engineering

- 14% Geography

- 8.5% Public Health

- 16.1% Chemistry

- 13.6% Computer and Information Science

Response Overview

Sample Size: 777

Valid Responses: 232

Response Rate: 29.9%

Date initial findings posted: 12.12.23

Most recent update: 12.12.23

Days survey in field: 37

Average response time: 7.4
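The response rate and field period above follow directly from the reported counts and dates. The snippet below is a minimal sketch of that arithmetic, assuming the study dates are written as DD.MM.YY and that the field period counts both the first and last day; the variable names are illustrative and do not come from the survey documentation.

```python
from datetime import date

# Figures reported in the overview above.
sample_size = 777
valid_responses = 232

# Study dates, read as DD.MM.YY (assumption).
field_start = date(2023, 9, 29)
field_end = date(2023, 11, 4)

# Response rate as a percentage of the sample, rounded to one decimal place.
response_rate = round(100 * valid_responses / sample_size, 1)

# Days in field, counting both the start and end dates (assumption).
days_in_field = (field_end - field_start).days + 1

print(f"Response rate: {response_rate}%")
print(f"Days in field: {days_in_field}")
```

Under these assumptions the calculation reproduces the reported 29.9% response rate and 37 days in field.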

Survey Demographics

Respondent Demographics:

- 35.2% Female

- 64.4% Male

- 100% Academic

- 0% Industry

Language(s): English

Section Overview

This section reports scientists’ opinions on the regulation of generative AI for general use and in academic settings. We designed an experiment to examine how question wording and the availability of a moderate (middle) response option affect scientists’ stated opinions about government regulation of generative AI.

Question

Would you favor or oppose having a federal agency regulate the use of generative AI similar to how the FDA (Food and Drug Administration) regulates the approval of drugs and medical devices? (N=231)

Finding

Nearly three-quarters (71%) of scientists strongly or somewhat support federal agency regulation of the use of generative AI. Only 15% strongly or somewhat oppose federal agency regulation, and 14% neither support nor oppose federal agency regulation of generative AI.

Experiment

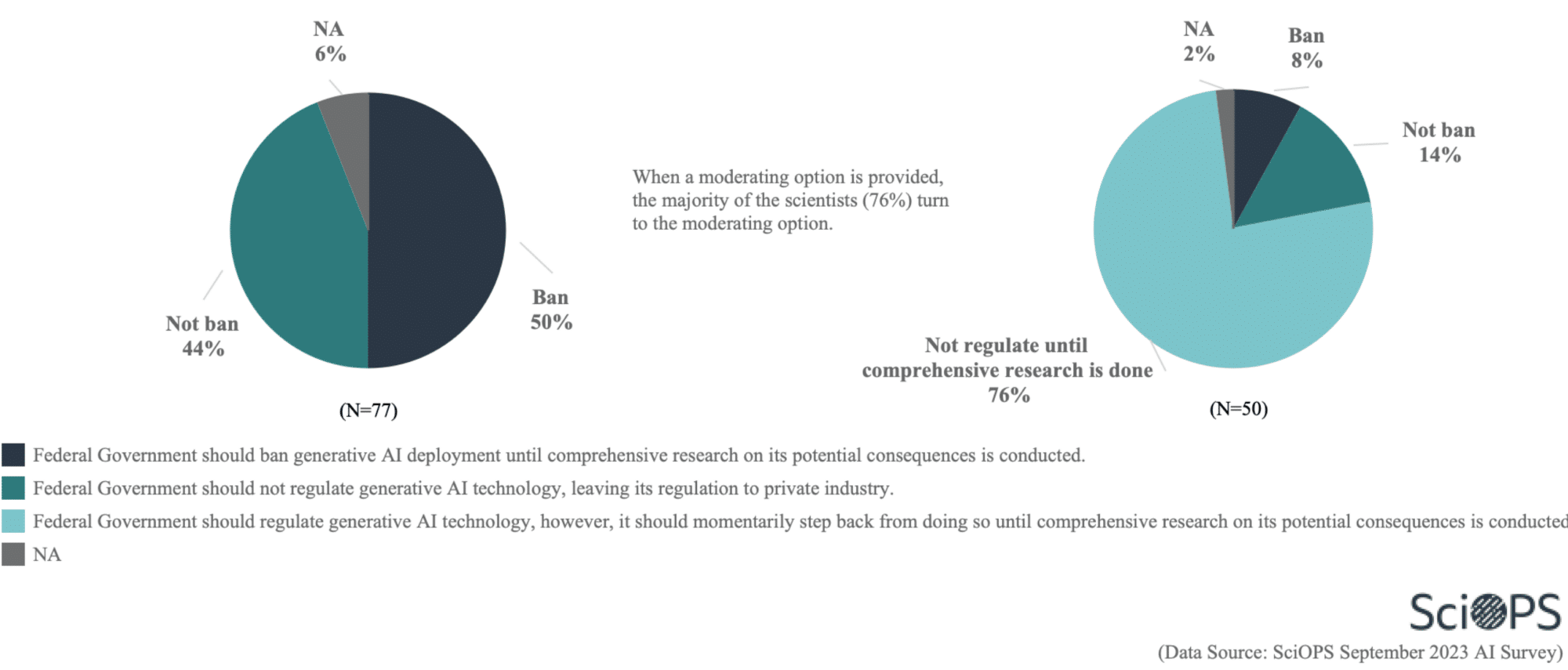

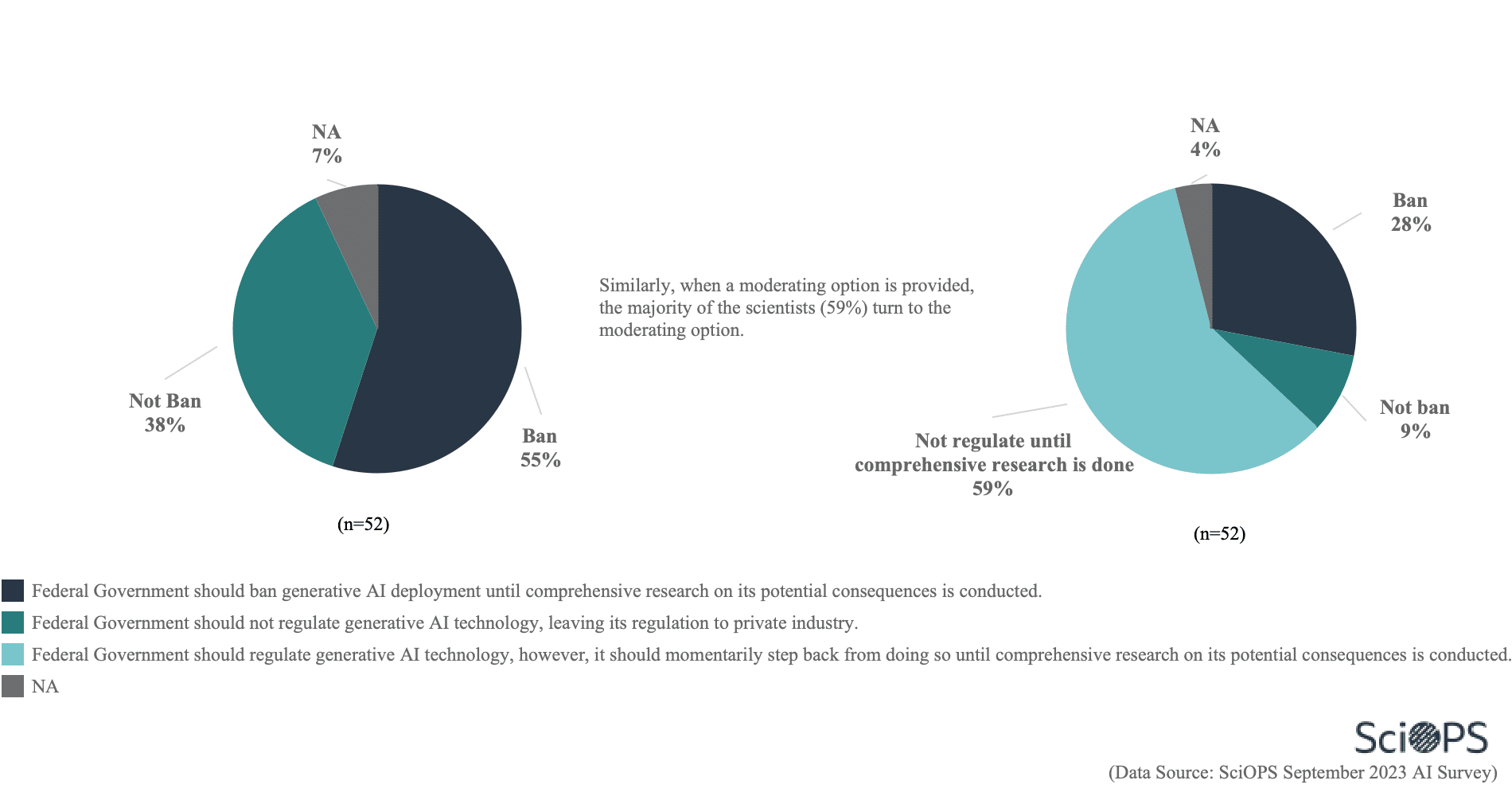

We designed an experiment around a policy question on scientists’ attitudes toward government regulation of generative AI to examine the effects of both question wording and the provision of a middle response option. Specifically, we examined question versions asking about regulation of AI that (a) did and did not mention ethical and unintended consequences of AI, and (b) did and did not provide a middle response category between outright banning and not banning generative AI technology. Respondents were randomly assigned to one of the four resulting versions of the question.

Question

Which of the following is closest to your current point of view about the role of the government in regulating generative AI? (Version 1)

Finding

The graphs on the left show the responses of those who did not have a moderate answer choice. The graphs on the right show the responses of those who did have a moderate answer choice.

Question

Considering the possible ethical and unintended consequences generative AI may have, which of the following is closest to your current point of view about the role of the government in regulating generative AI? (Version 2)

Finding

Overall, for both question versions, when a middle alternative on the extent of government regulation of generative AI is offered, the majority of scientists endorse it (76% and 59% for question versions 1 and 2, respectively). Support for banning AI technology falls from 50% to 8% in version 1 and from 55% to 28% in version 2. Likewise, support for not banning AI technology falls from 44% to 14% and from 38% to 9%, respectively, when a middle option between outright banning and not banning AI is provided.

When the possible threats of generative AI are specifically mentioned (question version 2), more scientists support the federal government banning generative AI deployment until comprehensive research on its potential consequences can be conducted, regardless of whether a middle response option is provided: support rises from 50% to 55% when no middle option is provided, and from 8% to 28% when a middle option is provided.

Additionally, scientists were somewhat more likely to avoid answering the question when they were not offered the moderate middle response option: the share not answering rose from 2% to 6% in question version 1 and from 4% to 7% in question version 2.
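The percentages reported above can be arranged as a 2×2 table (question version by whether a middle option was offered), which makes the two effects easier to compare. The sketch below uses only the figures quoted in the findings; the category labels (`ban`, `middle`, `no_ban`, `no_answer`) and function names are shorthand introduced here, not the survey's own response wording.

```python
# Reported percentages, keyed by (question version, middle option offered).
# Category labels are illustrative shorthand, not the survey's wording.
reported = {
    (1, False): {"ban": 50, "no_ban": 44, "no_answer": 6},
    (1, True): {"ban": 8, "middle": 76, "no_ban": 14, "no_answer": 2},
    (2, False): {"ban": 55, "no_ban": 38, "no_answer": 7},
    (2, True): {"ban": 28, "middle": 59, "no_ban": 9, "no_answer": 4},
}


def middle_option_effect(version, option):
    """Change in percentage points when a middle option is offered."""
    return reported[(version, True)][option] - reported[(version, False)][option]


def wording_effect(middle_offered, option):
    """Change in percentage points when consequences are mentioned (version 2 vs. 1)."""
    return reported[(2, middle_offered)][option] - reported[(1, middle_offered)][option]


# Sanity check: the reported shares in each experimental cell should total 100%.
totals = {cell: sum(shares.values()) for cell, shares in reported.items()}

for version in (1, 2):
    print(f"Version {version}: middle option changes 'ban' by "
          f"{middle_option_effect(version, 'ban')} points")
for middle_offered in (False, True):
    print(f"Middle offered={middle_offered}: mentioning consequences changes 'ban' by "
          f"{wording_effect(middle_offered, 'ban')} points")
```

These are simple differences between reported percentages; they say nothing about statistical significance, which would require the per-cell sample sizes that this section does not report.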

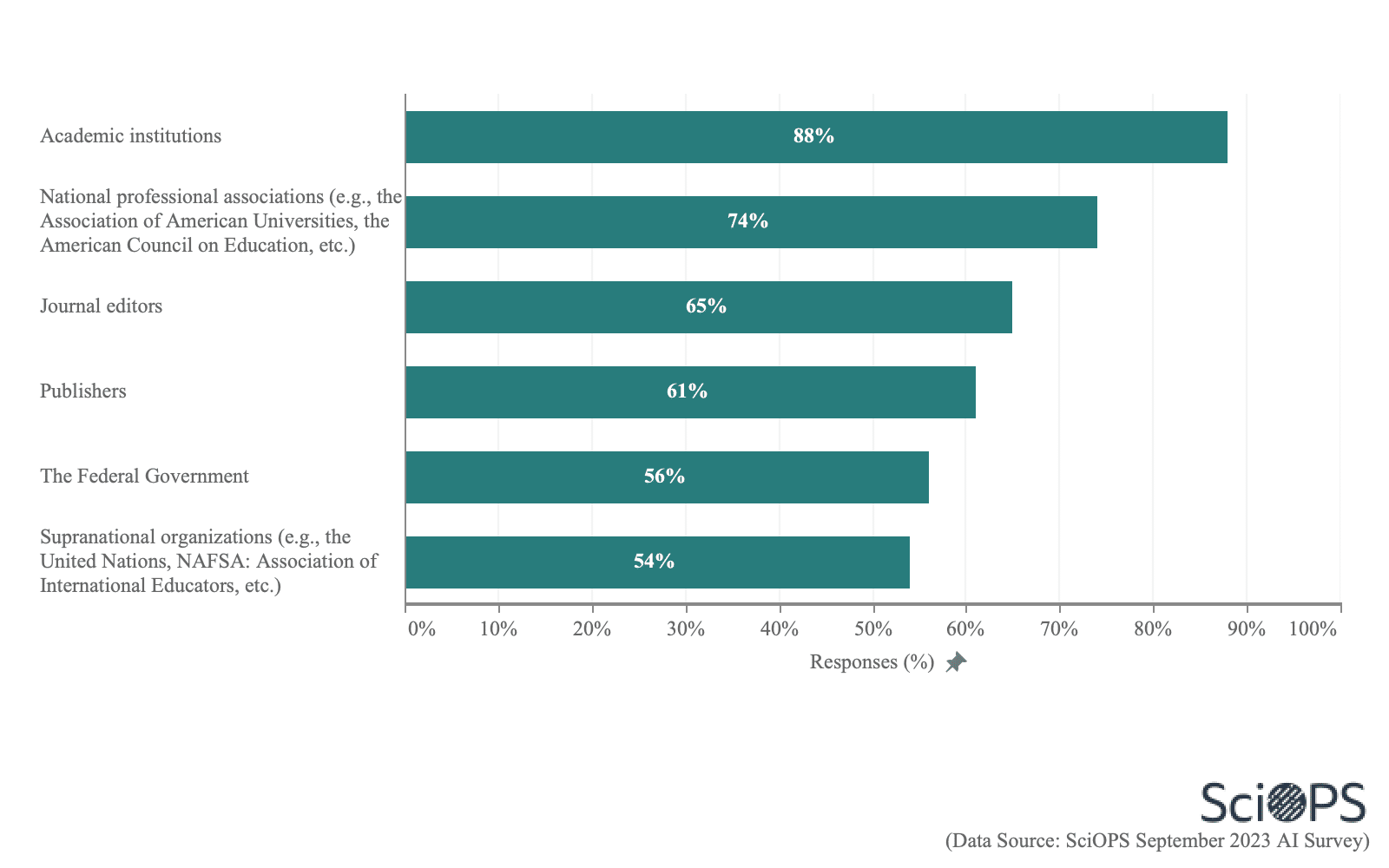

Question

In your opinion, which of the following, if any, should have primary responsibility for regulating generative AI in academic settings? (N=222)

Finding

The majority of respondents reported that academic institutions (88%), national professional associations (74%), journal editors (65%) and publishers (61%) should have primary responsibility for regulating generative AI in academic settings. Fewer scientists believe that supranational organizations (54%) and the federal government (56%) should have primary responsibility for regulating generative AI in academic settings.

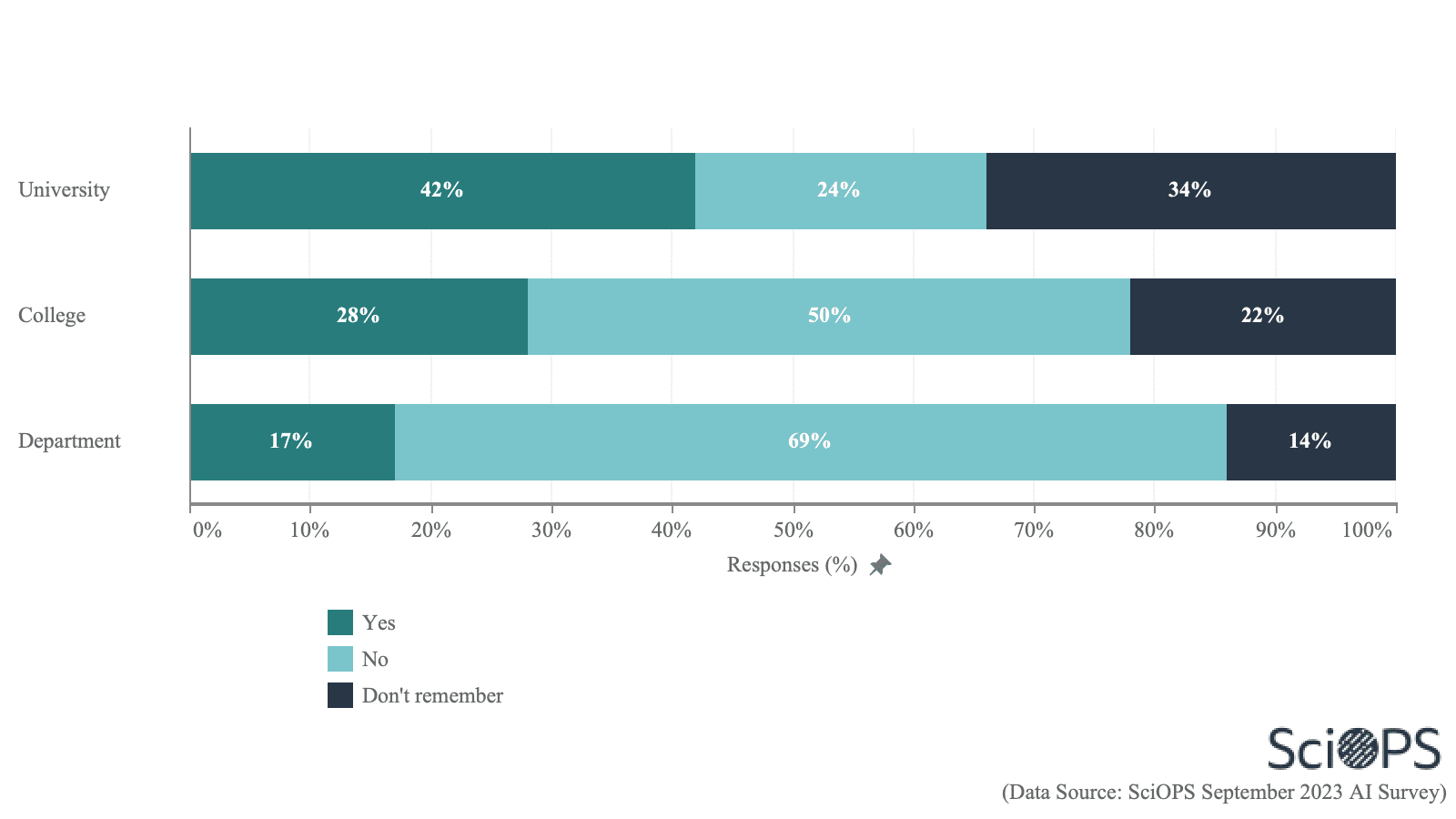

Question

Are there any steps or guidelines regarding the responsible use of ChatGPT or similar generative AI language models now in place in your: (N=227)

Finding

Fewer than half of scientists (42%) reported that their university has guidelines in place on the responsible use of generative AI. Fewer than one-third (28%) of respondents reported guidelines at the college level, and only 17% reported that their departments have guidelines on the responsible use of generative AI. Among those who reported department-level guidelines on generative AI (n=28), most (65%) reported that the guidelines place restrictions only on students’ use of generative AI, while one-third (34%) reported restrictions on both faculty and students. Only 1% reported that their department guidelines place restrictions only on faculty use of generative AI.

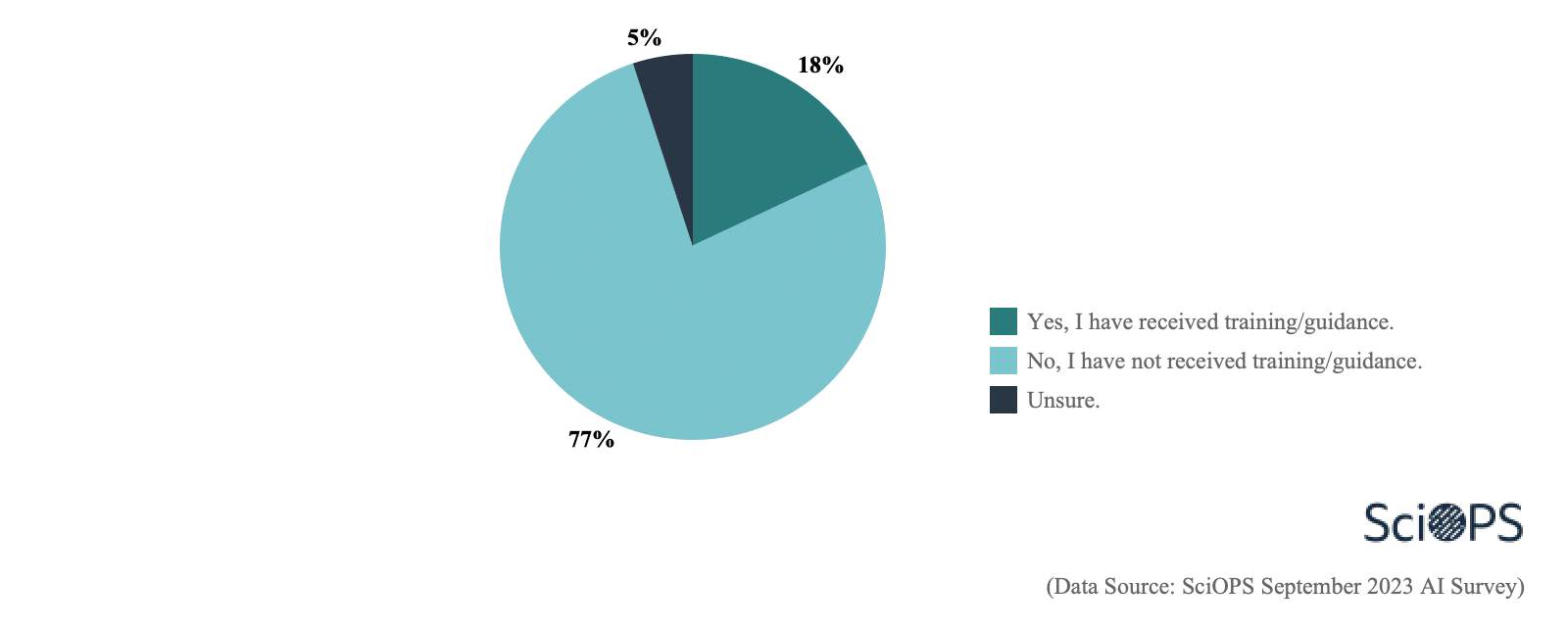

Question

Have you received any training or guidance on the ethical considerations associated with using generative AI in an educational or research context offered by your university? (N=227)

Finding

Most scientists (77%) reported that they have not received training or guidance from their universities on the ethical considerations associated with using generative AI in an educational or research context.

This national survey of academic scientists in the US was conducted by the Center for Science, Technology and Environmental Policy Studies (CSTEPS) at Arizona State University. The survey was approved by the Institutional Review Boards at Arizona State University.

The sample for this survey was selected from our SciOPS panel. The SciOPS panel was recruited from a random sample of PhD-level faculty in six fields of science. Contact information of faculty in the fields of biology, geography, civil and environmental engineering, chemistry, and computer and information science and engineering was collected from randomly selected Carnegie-designated Research Extensive and Intensive (R1) universities in the United States (US).

Contact information for academic scientists, social scientists and engineers in the field of public health was collected from all Council on Education for Public Health (CEPH) accredited public health schools. The full sample frame for recruiting the SciOPS panel includes contact information for 18,505 faculty members, of whom 1,366 agreed to join the SciOPS panel. This represents an AAPOR recruitment rate (RECR) of 7.5% (RR4).

Sample weighting and precision: The sample of respondents for this survey was weighted by the inverse of selection probabilities and post-stratified by gender, academic field, and academic rank to represent the full sample frame for recruiting SciOPS panel members as closely as possible. A conservative measure of sampling error for questions answered by the sample of respondents is plus or minus 6 percentage points.
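The report does not state how the sampling error figure was derived. As a rough check, the sketch below applies the textbook margin-of-error formula for a proportion at a 95% confidence level, assuming simple random sampling, the most conservative proportion (p = 0.5), and the 232 valid responses. The `design_effect` parameter is a hypothetical knob for illustrating how weighting could widen the interval; its default of 1.0 is an assumption, not a value taken from the survey.

```python
import math


def margin_of_error(n, p=0.5, z=1.96, design_effect=1.0):
    """Approximate margin of error, in percentage points, for a proportion.

    Assumes simple random sampling; design_effect > 1 is a hypothetical
    inflation factor for weighted samples (not reported in the survey).
    """
    return 100 * z * math.sqrt(design_effect * p * (1 - p) / n)


moe = margin_of_error(232)
print(f"Approximate margin of error: +/- {moe:.1f} percentage points")
```

Under these assumptions the result is roughly 6.4 percentage points, in line with the reported plus or minus 6. Questions answered by fewer respondents, or any design effect from weighting, would give a somewhat wider margin.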